The proposal to "freeze" the development of new versions of GPT and their analogs has become one of the most discussed topics today. The rationale for this proposal is the fear that overly intelligent systems will lead to nothing less than the death of mankind. That sounds very serious, especially given the names of those who agree with the proposal.

The previous chapter, AGI: IMPLICIT ASSUMPTIONS, discussed why actual intelligence cannot be expected from systems based on neural networks. Theoretical limitations, however, are met with disbelief even by a highly educated public when they are set against a practical demonstration of "intelligent behavior." In the case of GPT, behavior is understood as the generation of reasonable texts. If the system tells us what we agree with, we recognize it as reasonable. This approach to evaluation has a natural flaw: it blurs the difference between the ability to remember a correct text and then reproduce it (which is undoubtedly necessary for an intelligent system) and the ability to reason logically, which is absolutely necessary for recognizing the presence of intelligence.

Therefore, a realistic assessment of the intellectual capabilities of any system needs tests that require more than the ability to memorize and reproduce what has been learned. Let's use this rule and compare GPT with the parrot in this video:

https://www.youtube.com/watch?v=hZCupKo6aFg

The parrot in the video solves some rather non-trivial tasks (not every child can cope with them). At the same time, he does not need to demonstrate the ability to reproduce memorized texts - something completely different is required. He must experiment, try various actions, evaluate the result, and thus find the sequence of steps that leads to the desired result.

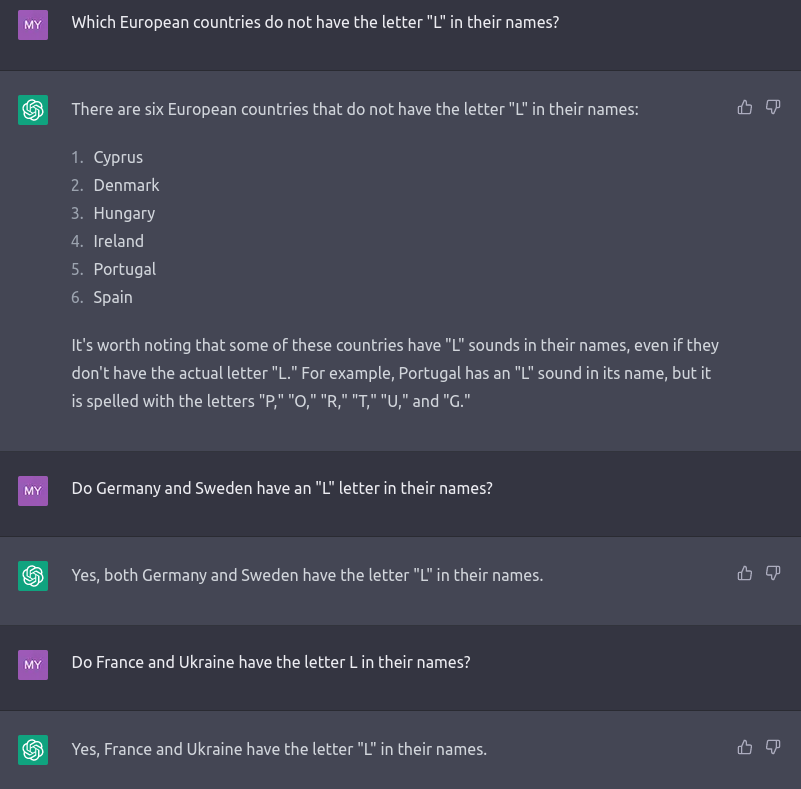

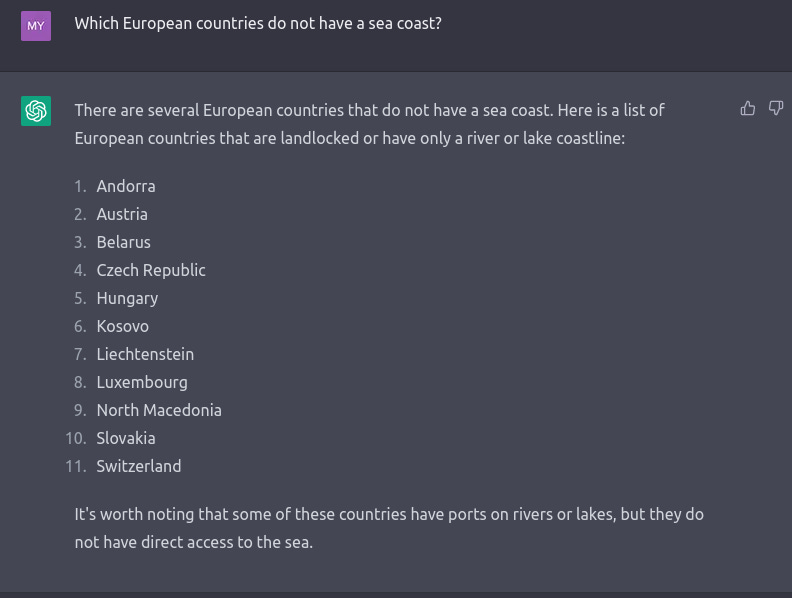

Compare this with what we have in the case of GPT. The results below were obtained with GPT-3.5, but GPT-4 differs not in principle but only in memory size, so "patching holes" in one place does not eliminate the others (in fact, it contributes to creating new ones). Here is the first example:

The system reproduces statements that are quite relevant to the request, yet it does not detect their obvious inconsistency in any way. Is this a coincidence? Here is the next example:

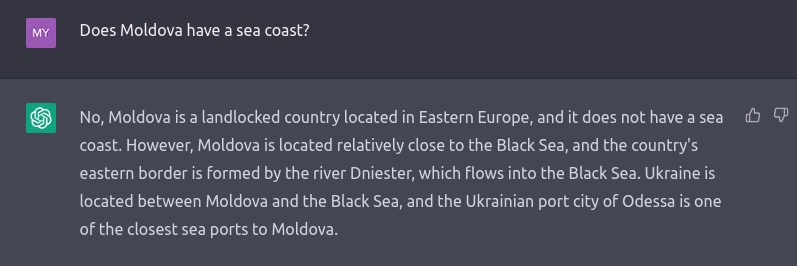

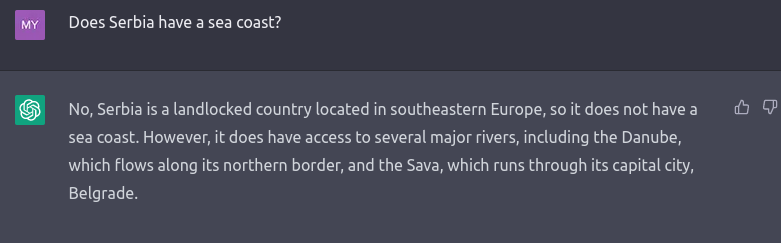

Everything is the same: both the fallacy of the statements and their internal inconsistency are obvious. These are not errors in the conclusions; there are no conclusions at all. When GPT includes in its output an account of how the result was obtained, this is not a record of the inferences made while constructing the answer. It is only fragments of the texts on which the system was "trained." That is why pieces with mutually exclusive statements quietly coexist in one response: nothing detects the conflict, because no reasoning takes place. As a rule, fragments unrelated to the essence of the question also end up in the response. For example, when asked about the presence of a sea coast, the system will also tell us about the presence of rivers. The reason is the same: text on the topic is extracted, but there is no reasoning.

The above examples explain the reason for the radically inflated estimates of the intellectual abilities of Large Language Models: inaccurate selection of tests. Engineering practice has been shaping the rules of adequate testing for centuries; the basic idea behind functional tests is to reveal the limits of the system under test. The situation is reversed in the case of GPT and its analogs: developers demonstrate only what is done correctly, and there is no independent testing system (unlike in automotive, aviation, and other "established" technologies). Panic about the intelligence of GPT and its peers, an intelligence that is not really present, is one result of this situation.
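To make the idea of a limit-revealing test more concrete, here is a minimal sketch in Python. It assumes the system under test can be wrapped as a function that takes a question and returns "yes" or "no"; the names `find_contradictions`, `lookup_model`, and `PAIRS` are hypothetical and invented for illustration, and `lookup_model` is a toy stand-in for text retrieval, not a real GPT interface. The test asks each question together with its negation and flags the pairs where the answers cannot both be true.

```python
from typing import Callable, List, Tuple

# Assumption: the system under test is wrapped as question -> "yes" / "no".
Model = Callable[[str], str]


def find_contradictions(
    model: Model, pairs: List[Tuple[str, str]]
) -> List[Tuple[str, str, str]]:
    """Return (claim, negation, answer) for every pair answered identically.

    A claim and its negation cannot both be true or both be false, so
    identical answers show that no consistency check took place.
    """
    failures = []
    for claim, negation in pairs:
        first = model(claim).strip().lower()
        second = model(negation).strip().lower()
        if first == second:
            failures.append((claim, negation, first))
    return failures


def lookup_model(question: str) -> str:
    """Toy stand-in: says "yes" whenever a memorized topic word appears."""
    memorized_topics = {"coast", "river"}
    text = question.lower()
    return "yes" if any(topic in text for topic in memorized_topics) else "no"


# Hypothetical question pairs; "country X" is a placeholder, not real data.
PAIRS = [
    ("Does country X have a sea coast?", "Is country X without a sea coast?"),
    ("Does country X have a desert?", "Is country X without a desert?"),
]
```

Calling `find_contradictions(lookup_model, PAIRS)` flags both pairs, because the stand-in reacts only to topic words and never compares its own answers. A real independent test suite would need far more than this, but the principle is the same: the test is built to expose a limit, not to showcase a success.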

The absence of actual intelligence (and, along with it, of intentions and the ability to act purposefully) does not mean that the system is safe, of course, but it does mean the absolute absence of an existential danger to humanity. The possibility of involuntary disinformation due to the specifics of the system (recall that the lack of intelligence also implies the absence of intentions), as well as the possibility of purposeful dissemination of disinformation by the owner of a GPT analog, is not a new danger to humanity: disinformation has existed and has been used since the appearance of homo sapiens.

No less surprising is the very idea of suspending development, because for many undeniable reasons it is unrealizable. No ban can stop development in countries that find it useful to continue (and there are such countries). And no prohibition can be effective if there is no way to detect violations, and there is absolutely no such way. The principles are known, computing capacity is everywhere, and it is easy to remove all mention of artificial intelligence from the names of projects. Politicians, economists, psychologists, and military people know this very well.

Finally, the last stone thrown at the new Luddites of the 21st century comes from biologists. The emergence of something more intelligent than the most intelligent of the planet's existing inhabitants is not a new phenomenon but a permanent process. The appearance of animals, for example, did not lead to the extinction of plants; it is precisely the opposite: the presence of plants is the key to the very possibility of the existence of animals. And the appearance of homo sapiens did not result in the destruction of less intelligent animals (for example, mosquitoes) or of plants.

Therefore, it is reasonable not to attempt the impossible but to do something useful and quite possible: to create a system of independent testing for systems that claim to have intelligence.