ChatGPT IN PROGRAMMING

LIMITS OF CAPABILITIES

Contrary to the usual order of presentation, the conclusion here precedes its justification and supporting examples, so that readers who already agree with it need not spend time on the rest.

Publicly expressed assessments include both enthusiasm that ChatGPT is, in effect, the first version of Artificial General Intelligence and fear that ChatGPT will leave programmers out of work. Our experiments, aimed at finding the limits of ChatGPT's capabilities rather than confirming the advertised ones, are disappointing for the former and reassuring for the latter:

ChatGPT is a helpful tool for programmers skilled enough to verify and modify generated code, but at the same time, ChatGPT lacks even rudimentary intelligence. ChatGPT operates exclusively on texts: it looks for something associated with the request, including code and explanations. It can "seamlessly" stitch fragments of text together but cannot use logical reasoning or detect contradictions and absurdities in the generated output.

The facts found in the experiments lead to this conclusion:

Formally correct code can be irrational. If several related functions are requested, such as finding the minimum, maximum, and average of a series of given values, the code will contain three successive scans of the entire series, although all three results can be obtained in a single scan. This is because ChatGPT can concatenate appropriate code/text fragments but cannot use other construction methods, since they require a logical analysis of the combined pieces. Another common form of illogicality is the presence of unnecessary statements, whose removal simplifies the code without changing the result.
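The single-scan alternative can be sketched as follows. This is our own minimal illustration, not code produced by ChatGPT; the function name `min_max_mean` is an assumption made for the example.

```python
def min_max_mean(values):
    """Return (minimum, maximum, mean) of a non-empty iterable in one pass."""
    iterator = iter(values)
    try:
        first = next(iterator)
    except StopIteration:
        raise ValueError("values must contain at least one number") from None

    lowest = highest = total = first
    count = 1
    for value in iterator:
        # A value below the current minimum cannot also exceed the maximum.
        if value < lowest:
            lowest = value
        elif value > highest:
            highest = value
        total += value
        count += 1
    return lowest, highest, total / count


print(min_max_mean([2, 4, 6]))
```

Because the series is read only once, the same function also works on inputs that can be traversed only once, such as a generator.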

The generated code and its explanations may contradict each other, and the explanations may be erroneous. For example, the code for generating sin(x)/x values is correct, but its comments, which give the supposedly expected results of the program, are incorrect: the code and the comments are generated independently of each other, and the explanations are based on erroneous statements.
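One way to keep stated results from drifting away from the code is to express them as executable checks rather than as comments. The sketch below is ours, not the program ChatGPT produced; the function name `sinc` and the sample points are assumptions chosen for illustration.

```python
import math


def sinc(x):
    """Return sin(x)/x, using the limit value 1.0 at x == 0."""
    if x == 0:
        return 1.0
    return math.sin(x) / x


# Expected results written as checks: a wrong expectation fails when run,
# whereas a wrong comment goes unnoticed.
assert sinc(0.0) == 1.0
assert abs(sinc(math.pi)) < 1e-12
assert math.isclose(sinc(math.pi / 2), 2 / math.pi)
```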

Since most of the code in the training set is illustrative in nature and purpose, it contains no input checks or exception handling; accordingly, these are missing from the code generated by ChatGPT. For practical use, a programmer must therefore understand what should be added to the code, and where, to make it suitable.
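The difference between illustrative and practical code can be sketched with a small example of our own. Both function names are hypothetical, and the task (averaging numbers given as strings) is only an assumption chosen to keep the example short.

```python
def mean_illustrative(tokens):
    # Tutorial-style code: assumes clean, non-empty input.
    numbers = [float(token) for token in tokens]
    return sum(numbers) / len(numbers)


def mean_checked(tokens):
    """Same computation with the checks practical code usually needs."""
    numbers = []
    for position, token in enumerate(tokens):
        try:
            numbers.append(float(token))
        except ValueError:
            # Report which input item was bad instead of a bare parse error.
            raise ValueError(
                f"token {position} is not a number: {token!r}"
            ) from None
    if not numbers:
        raise ValueError("no numbers were supplied")
    return sum(numbers) / len(numbers)
```

With an empty input, the first version fails with a `ZeroDivisionError` that says nothing about the cause, while the second reports the actual problem.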

Generated Python code is often less neat and efficient than C++ code generated for the same task. Transferring knowledge learned from training data in one programming language to code in another requires logical analysis, which ChatGPT is not capable of; as a result, ChatGPT replicates the higher skill of the average C++ programmer compared with the average Python programmer.

ChatGPT can generate unit tests for its code; however, the generated tests do not cover all the obvious cases, i.e., they are incomplete (especially if there is more than one variable test parameter, so that various combinations of the parameters must be tested).
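Testing combinations of parameters, rather than one parameter at a time, can be sketched as below. The function under test, `clamp`, is a hypothetical example of ours, as are the sample values; the point is only the use of `itertools.product` to enumerate every combination.

```python
import itertools


def clamp(value, low, high):
    """Hypothetical function under test: limit value to [low, high]."""
    if low > high:
        raise ValueError("low must not exceed high")
    return max(low, min(value, high))


def check_clamp_all_combinations():
    """Check clamp on every combination of the sample values."""
    samples = [-10, -1, 0, 1, 10]
    checked = 0
    for value, low, high in itertools.product(samples, repeat=3):
        if low > high:
            # An inverted range must be rejected.
            try:
                clamp(value, low, high)
            except ValueError:
                pass
            else:
                raise AssertionError(f"no error for low={low}, high={high}")
        else:
            result = clamp(value, low, high)
            assert low <= result <= high
            # An input already inside the range must be returned unchanged.
            if low <= value <= high:
                assert result == value
        checked += 1
    return checked
```

Five sample values for three parameters give 125 combinations, including the boundary cases where `value`, `low`, and `high` coincide.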

ChatGPT cannot use logic when responding to user prompts to correct the generated code: it corrects what is explicitly pointed out and leaves unchanged what is obviously related to it. For example, ChatGPT generates code to process a large quantity of numbers from a file. The entire content of the file is read into an array of strings, and then the strings are converted to numbers. In response to the remark that all the numbers may not fit in RAM, the code is modified: the array of numbers is eliminated, and each number is processed immediately after its string is converted, but the array of strings remains in the code. It is eliminated only after pointing out that this array can be unacceptably large too.
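A version that holds neither array in memory can be sketched as follows. This is our own illustration under stated assumptions: the file contains one number per line, the processing is a simple sum, and the function name `sum_numbers_in_file` is hypothetical.

```python
def sum_numbers_in_file(path):
    """Sum a one-number-per-line text file without loading it into memory."""
    total = 0.0
    with open(path, encoding="utf-8") as source:
        # Iterating over the file object yields one line at a time,
        # so neither a list of strings nor a list of numbers is built.
        for line in source:
            text = line.strip()
            if text:  # skip blank lines
                total += float(text)
    return total
```

Memory use here depends on the length of the longest line, not on the size of the file.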

ChatGPT is formally capable of validating user-submitted code. However, our tests have shown that this functionality is unsuitable in practice (an example is given below).

These capabilities make ChatGPT usable by programmers. Its limitations, however, prevent non-programmers from using it for anything beyond programming exercises and "smart calculator" style mini-programs.

We performed dozens of simple tests, for most of which ChatGPT generated correct (though not always rational) code. The limits of applicability, however, are determined by the situations in which the generated code and/or text was erroneous. Here we confine ourselves to two cases that show the limits well:

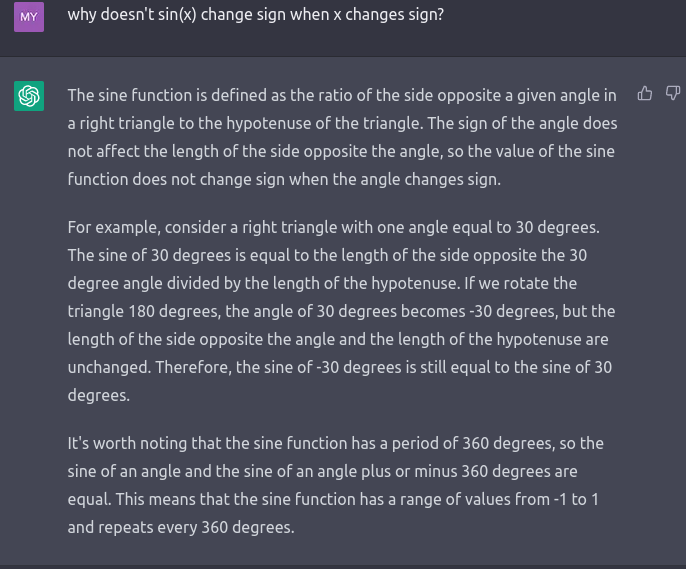

ChatGPT states in one sentence that sin(x) does not change sign when x changes sign, and in the following sentence, it says that sin(x) takes values from -1 to +1:

ChatGPT checks a C++ function that contains a minor flaw: the sum of integers is accumulated in a variable of an unsigned type, "unsigned s" (which, however, does not change the result). ChatGPT claims that there are four errors, and all four claims are wrong. Finally, it gives its own version of the code, which preserves the original sloppiness and is incorrect in a more serious way, printing the last number of the result twice:

Interesting results can also be found in "Is ChatGPT Really a `Code Red` for Google Search?" by Gary Marcus.