AGI: OBJECT TRACKING

ONE TERM, MANY MEANINGS

Tracking the movement of objects is not a new challenge; new technologies based on neural networks have only expanded the already wide range of approaches to it.

The approaches whose descriptions are publicly available can be classified as follows:

1. Tracked objects have a distinctive feature known in advance (usually color), which makes it possible to find them in the current frame with the simplest algorithms. A typical application is school robotics competitions.
2. Tracked objects are image areas whose integral change in brightness and/or color from one frame to the next exceeds a certain threshold. A typical application is security systems.
3. Tracked objects are identified by a neural network: the current frame is searched for objects that the network has been trained to recognize.
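To make cases (1) and (2) concrete, here is a minimal pure-Python sketch. It assumes frames arrive as nested lists (rows of RGB tuples for case 1, rows of grayscale brightness values for case 2); the function names `find_by_color` and `changed_blocks`, the block size and the thresholds are hypothetical choices for illustration, not taken from any particular system.

```python
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]


def find_by_color(frame: List[List[RGB]], target: RGB,
                  tol: int = 30) -> Optional[Tuple[float, float]]:
    """Case (1): centroid (row, col) of all pixels whose color is within
    `tol` of `target` in every channel, or None if no pixel matches."""
    row_sum = col_sum = count = 0
    for r, line in enumerate(frame):
        for c, pixel in enumerate(line):
            if all(abs(p - t) <= tol for p, t in zip(pixel, target)):
                row_sum += r
                col_sum += c
                count += 1
    if count == 0:
        return None
    return row_sum / count, col_sum / count


def changed_blocks(prev: List[List[int]], curr: List[List[int]],
                   block: int = 4, threshold: int = 100) -> List[Tuple[int, int]]:
    """Case (2): split two equally sized grayscale frames into block x block
    areas and return the top-left corner of every area whose summed
    absolute brightness change exceeds `threshold`."""
    height, width = len(curr), len(curr[0])
    hits = []
    for top in range(0, height, block):
        for left in range(0, width, block):
            total = sum(
                abs(curr[r][c] - prev[r][c])
                for r in range(top, min(top + block, height))
                for c in range(left, min(left + block, width))
            )
            if total > threshold:
                hits.append((top, left))
    return hits


# Usage: a dark 8x8 frame in which a bright 2x2 patch appears.
prev = [[0] * 8 for _ in range(8)]
curr = [row[:] for row in prev]
for r in (5, 6):
    for c in (1, 2):
        curr[r][c] = 200
print(changed_blocks(prev, curr))  # → [(4, 0)]
```

Neither function says anything about which detected area in the current frame corresponds to which object in the previous one; each frame is processed on its own.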

In all three cases, detection does not take into account that objects move continuously in space. The most noticeable consequence is the difficulty of analyzing situations in which more than one object of the same type appears in two consecutive frames.

Tracking means obtaining, for each tracked object, the sequence of its positions (coordinates). When there are several objects of the same type, the problem of matching objects across frames arises: to tell the objects apart, factors other than the object type must be considered. Examples are several balls of the same color in case (1) or several people in case (3). The situation becomes even more complicated when the neural network identifies an object unstably; for example, a pedestrian walking a bicycle is recognized as a person in one frame and as a bicycle in another. No easier is the case of two pedestrians in similar clothes who walk in opposite directions and swap places between two frames.

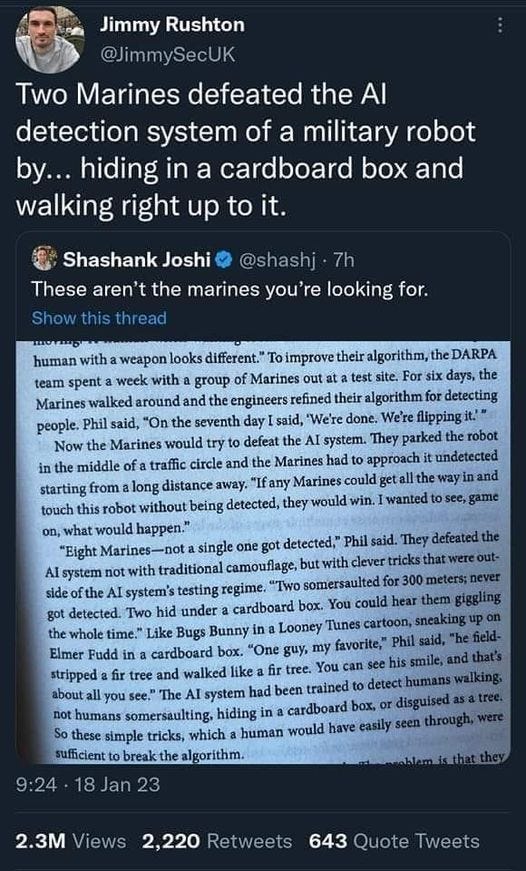
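The correspondence problem can be illustrated with the simplest matching rule, greedy nearest-neighbour association by position. This rule and the function `match_nearest` are our illustrative choices, not a method proposed in the text: each object known from the previous frame claims the closest unclaimed detection in the current frame. When two look-alike pedestrians walk past each other between frames, the rule silently swaps their identities.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def match_nearest(previous: Dict[str, Point],
                  detections: List[Point]) -> Dict[str, Point]:
    """Greedy nearest-neighbour association: each known object, in turn,
    claims the closest detection not yet taken by another object."""
    free = list(detections)
    result: Dict[str, Point] = {}
    for name, pos in previous.items():
        if not free:
            break
        best = min(free, key=lambda d: math.dist(pos, d))
        free.remove(best)
        result[name] = best
    return result


# Frame 1: pedestrian A on the left walking right, B on the right walking left.
frame1 = {"A": (0.0, 0.0), "B": (10.0, 0.0)}
# Frame 2: they have passed each other (A is really at x=7, B at x=3),
# but the detector only reports two unlabeled "person" positions.
frame2_detections = [(3.0, 0.0), (7.0, 0.0)]

print(match_nearest(frame1, frame2_detections))
# → {'A': (3.0, 0.0), 'B': (7.0, 0.0)}  (identities swapped)
```

Because both detections are of the same type, nothing in the per-frame output distinguishes them, so the error cannot be noticed from these two frames alone.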

Naturally, if the neural network does not recognize an object present in the frame, that object cannot be detected at all.

The described difficulties have quite tangible consequences in practice, in particular in the operation of autonomous driving systems for cars, as in the situation described below:

However, a fundamentally different approach, one implemented in nature, is possible: an object is first detected solely because it moves; its movement is then tracked, and only after that is the object identified, if possible. When identification is impossible, the object's movement is still monitored, and decisions are made based on its trajectory, speed, and size. In the general case, this approach makes it possible to detect changes over time in an object's coordinates, angular size, and orientation in space.
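A minimal sketch of the decision step, assuming some motion detector (not shown here and not specified in the text) already delivers, for one moving object, timestamped observations of its image position and angular size. The `Observation` type, the `summarize` function and the 5% growth threshold are hypothetical; the sketch only shows that speed, direction and approach or recession can be derived from the trajectory without knowing what the object is.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Observation:
    t: float     # time, seconds
    x: float     # horizontal image position, degrees (assumed unit)
    y: float     # vertical image position, degrees (assumed unit)
    size: float  # angular size, degrees (assumed unit)


def summarize(track: List[Observation], growth_threshold: float = 0.05) -> dict:
    """Derive identity-free motion features from the first and last
    observations of one object's track."""
    if len(track) < 2:
        raise ValueError("need at least two observations")
    first, last = track[0], track[-1]
    dt = last.t - first.t
    if dt <= 0 or first.size <= 0:
        raise ValueError("need a positive time span and a positive initial size")
    dx, dy = last.x - first.x, last.y - first.y
    speed = math.hypot(dx, dy) / dt                  # angular speed, deg/s
    heading = math.degrees(math.atan2(dy, dx))       # direction of image motion
    growth = (last.size - first.size) / first.size   # relative angular-size change
    # Assumes the object's physical size is constant, so a growing
    # angular size means it is getting closer.
    if growth > growth_threshold:
        trend = "approaching"
    elif growth < -growth_threshold:
        trend = "receding"
    else:
        trend = "constant distance"
    return {"speed": speed, "heading": heading, "growth": growth, "trend": trend}


track = [Observation(0.0, 0.0, 0.0, 2.0),
         Observation(0.5, 1.0, 0.0, 2.2),
         Observation(1.0, 2.0, 0.0, 2.5)]
print(summarize(track))
# → {'speed': 2.0, 'heading': 0.0, 'growth': 0.25, 'trend': 'approaching'}
```

Using only the endpoints keeps the sketch short; a fuller treatment would more likely fit the whole trajectory and also track orientation.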

The process relies on the continuity of changes in the position and orientation of objects, so there is no problem of matching objects across consecutive frames, and unknown or uncertainly identified objects can be tracked just as well as known ones.

As in other cases, there is another side of the coin: static objects, which by their nature do not require tracking, cannot be detected this way. More precisely, everything stationary in the field of view is perceived as a single global background object. If components of the static environment need to be recognized, this must be done separately by other means; being static, however, they do not need to be tracked.

The implementation of this approach is based on the principles set out in the chapter AGI: STRUCTURING THE OBSERVABLE.

The details of the approach will be described in one of the following chapters.