AGI: ARTIFICIAL NEURAL NETS

ENGINEERING POINT OF VIEW

Popular science articles and news from artificial intelligence, Deep Learning, Large Language Models, GPT, and so on, voluntarily or unwittingly, contribute to the spread of an inadequate understanding of neural networks. This chapter discusses aspects rarely mentioned in popular sources of information and interfere with a realistic assessment of the situation.

The tone and number of publications about software tools based on neural networks can give the impression that this is a very complex technology, in essence, based on some new ideas. In reality, everything is precisely the opposite. The concept of neural networks has been known for about half a century and is very simple in essence, and the program code that implements a functioning neural network takes about a page of code. Why have tools based on neural networks only become practically used in the last decade? The reason for this is the other side of the coin: the simplicity of the idea is offset by the need for enormous computational resources to provide valuable results in practice.

From a mathematical point of view, a neural network is an ordinary function, which is similar to the sin (x) function or a function that determines the shortest path between two points on the earth's sphere:

Using a neural network, like any function, comes down to setting arguments and calculating the result. If the software tool is capable of more, then the neural network does not implement these additional features; some other things are used along it.

From a programming point of view, a neural network is an enormous expression. Implementation complexity is determined solely by quantitative factors: the number of constants in this function/expression was measured in thousands, then millions, and today it reaches billions.

A valuable use of a neural network requires constructing an expression of such a structure that can provide the desired results and then assigning values to all constants of this expression.

The expression structure is given by the structure of the neural network (directed graph), which is converted into a sequence of elementary operations for calculations.

Proven ( see Universal approximation theorem ) that a neural network as a category of objects is an ideal approximator: any function can be represented by a specific neural network, providing a calculation with the required accuracy. Wonderful, isn't it? But there is a nuance: the corresponding theorem does not give any hints as to what the structure of the neural network should be for a particular task and what the constants' values should determine the calculation's desired result. Therefore, the degree of success of using a neural network radically depends on what structure of the neural network is chosen and what method of selection of constants is used.

Choosing the structure of a neural network is an informal task and comes down to trial and error and the use of structures that have proven to be helpful in similar tasks; in terms of the combination of methods, this is a modern analog of alchemy.

The term "selection of constants" is not used by chance. In contrast to the "classical" methods of approximation, where the constants of the approximator function are calculated by solving a system of equations, in the case of neural networks, it is the selection that takes place; the selection process got inherently slangy name "neural network training". The choice of the values of a gigantic set of constants naturally requires equally tremendous computational resources. Reducing the time of the selection of constants ("training") process dictates the use of supercomputers, GPU/TPU, which in turn increases the complexity and size of the code that implements this selection.

The process of selecting the values of the constants is based on the estimation of the integral value of the errors. The integral estimate analyzes the difference between the expected values and what the tested set of constant values gives. The error size is calculated for each sample from the "training" data set, and then the quality of the set of constants is evaluated. Thus, selecting constants ("training" a neural network) is the task of minimizing the approximation error. The minimization problem is usually reduced to a step-by-step correction of the values of the constants that reduces the integral error (using, for example, gradient descent). The process is very costly, which, along with the high labor intensity of preparing "training" data, makes the process expensive.

The problem not emphasized in publications devoted to the technology of neural networks (Deep Learning, LLM, etc.) is that for complex applications, the task of minimizing the integral error by choosing constants is multi-extremal. Stepwise improvement leads to one of many extremums, the number of which is unknown, and which one depends on the starting set of constants (and partly on the method of stepwise improvement: some large steps can lead from the neighborhood of one minimum to the neighborhood of another). In most cases, the recommendations come down to the fact that you need to choose the initial set at random and repeat the selection/training process for several starting combinations. However, it seems more reasonable to organize the selection in two stages: in the first phase, many random combinations are tested constants, and the combination with the smallest integral error is selected, which is then improved step by step in the second phase of the process.

Another problem is that the integral estimate of the error (scalar value) may not be adequate for the essence of the problem; two different sets of constants with the same value of the integral error will produce the most sensitive errors in different situations. Which option is preferable should be clarified using additional considerations.

Those who are interested in "playing around" with neural networks can use our straightforward implementation, which is convenient for experimentation (single C ++ header file): https://github.com/mrabchevskiy/neuro

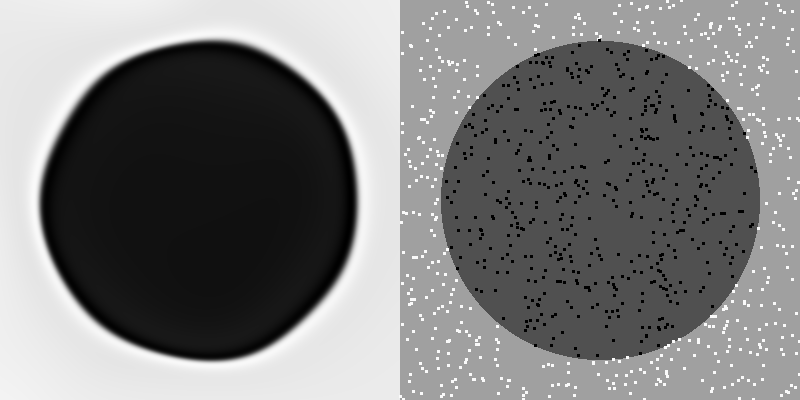

The pictures below show the results of an unsuccessful and successful selection of the starting constant combination for the problem of neural network approximation of a Boolean function of two variables:

f(x,y) = ( x*x + y*y ) > R*R

Which is equal to 1 outside the circle of radius R and 0 inside it:

The neural network configuration, including the values of the constants, is saved in the file (and loaded from the file); in the case of a two-dimensional task, images are rendered using the cross-platform SFML package. Details are in the comments inside the code.

In conclusion, the described option for choosing constants with an unchanged function structure (which makes neural networks similar to classical approximation methods) is not the only possible approach. Alternative approaches based on function structure selection can be found in the chapter AGI: STRUCTURES DISCOVERING of this series and in speeches and publications by Arthur Franz: Experiments on the generalization of machine learning - AGI-21 Conference.